Automation Without Execution Isn’t a Silver Bullet

A Comment That Got 6,000+ Impressions

Last week I commented on a LinkedIn post about AI and predictive maintenance: “Fair evaluation of the problem but the solution is nowhere near.”

I don’t usually take a combative stance, but that comment got 6,000 impressions. That is more engagement than most carefully crafted posts that play by the book.

Why? Because everyone in O&M is thinking it while sitting through vendor demos that promise autonomous operations, or while being flooded with LinkedIn ads.

It’s no secret: I use AI daily. Heavily. Workflow automation, document analysis, research, content generation. I handle roughly three times the workload I managed five years ago. Same hours, better quality. This article? AI helped draft it. Then I fixed it, because it’s my name on the line, after all.

I’m not anti-AI. I’m anti-bullshit. And this shiny new toy breeds BS like mushrooms after the rain.

The goal of automated analytics and predictive maintenance is to reduce ticket inflation and help get quality work done within SLAs, not to generate 300 alerts a week that drown your team. Most of the time, it fails at that goal.

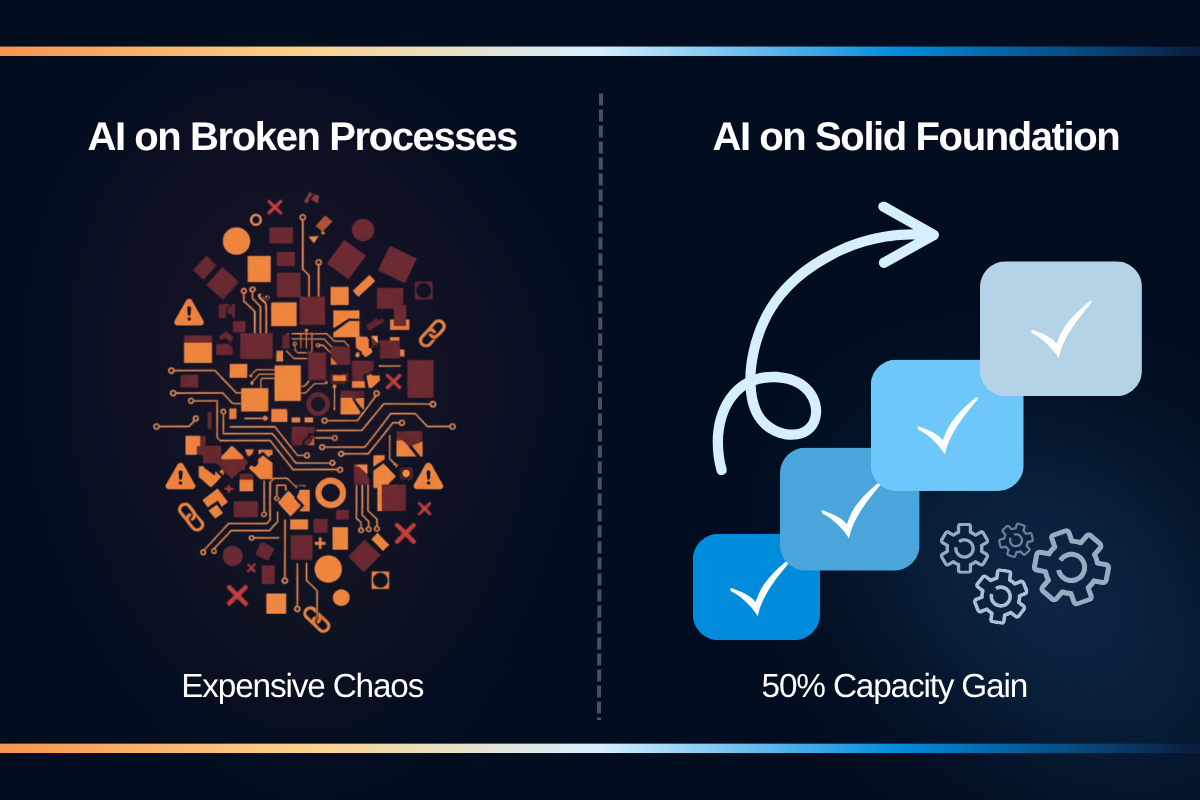

Some common sense: AI deployed on broken processes is expensive chaos. AI on healthy processes can increase your service capacity by roughly 40-60%. That’s almost like adding another team part time.

But the industry keeps spending millions on AI detection while technicians drive 45 km with the wrong parts because nobody fixed the basic workflow problems first.

Subscribe if you’re tired of solar operations hype that ignores operational reality. I write about what works, not what gets applause at conferences (although I do speak at conferences).

Why I Can Critique This (As Someone Using AI Daily)

I’m not selling copy-paste AI systems. I’m using them and helping you adopt them.

Solar Notions’ daily work runs on AI: workflow automation, document and data analysis, research synthesis, content generation. What used to take 3-4 hours now takes 20 minutes of review. AI assembles and does the grunt work; I analyze and decide.

Result: Three times the throughput. Same working hours. No burnout.

So yes, I’m for AI automation, but with surgical precision. Otherwise you’re just compounding dysfunction faster.

The goal isn’t replacing humans. It’s eliminating the repetitive nonsense that stops skilled people from doing actual work—troubleshooting complex problems, making judgment calls, solving things that need expertise.

To draw a parallel with a well-known post: someone wrote that we need AI to do the dishes and take out the trash, not to handle creative work and research. The same applies to solar operations. Most people in the field are passionate about it; they love the thrill of detective-style troubleshooting and root cause analysis. AI needs to remove what stands between people and excellence in what they do.

Because I experience this relief daily, more so as systems become more advanced and better integrated, I can separate what works from the vendor dog-and-pony show.

The Three-Stage Service Pipeline (Often Overlooked)

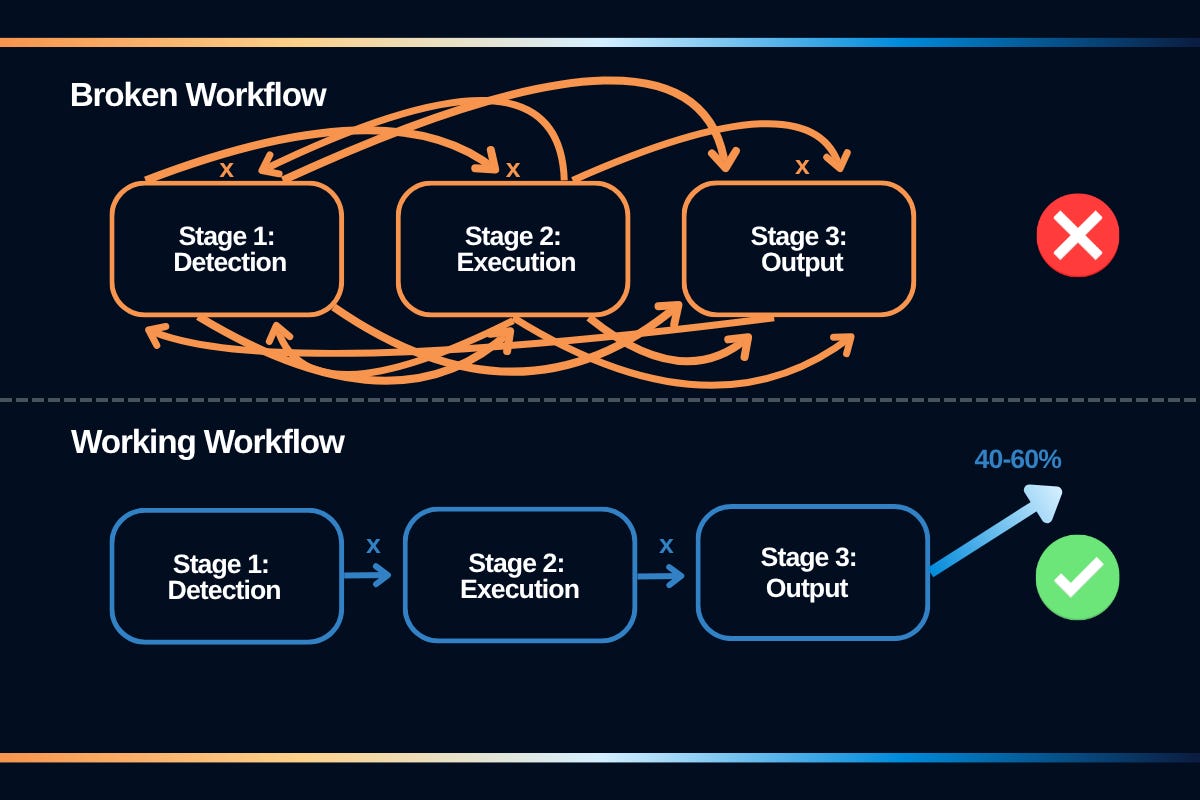

Operations work as a service pipeline with three stages. It’s a simplification, but accurate enough. Most AI deployments focus on Stage 1 while Stages 2 and 3 burn.

What’s typically not explained enough: AI applied correctly at each stage on solid processes creates compound effects. That service capacity improvement comes from multiplication across the pipeline, not magic algorithms.

This only works if your plant is of decent quality, your processes function, AND your tech stack is actually interoperable. Otherwise AI just compounds dysfunction faster, or gets throttled by your overpriced, non-communicating infrastructure. I’ve debugged enough operations to know that a monitoring system that can’t seamlessly feed your work order system kills efficiency faster than any process problem.

Let’s look at the realistic numbers for solar O&M and Technical Asset Management:

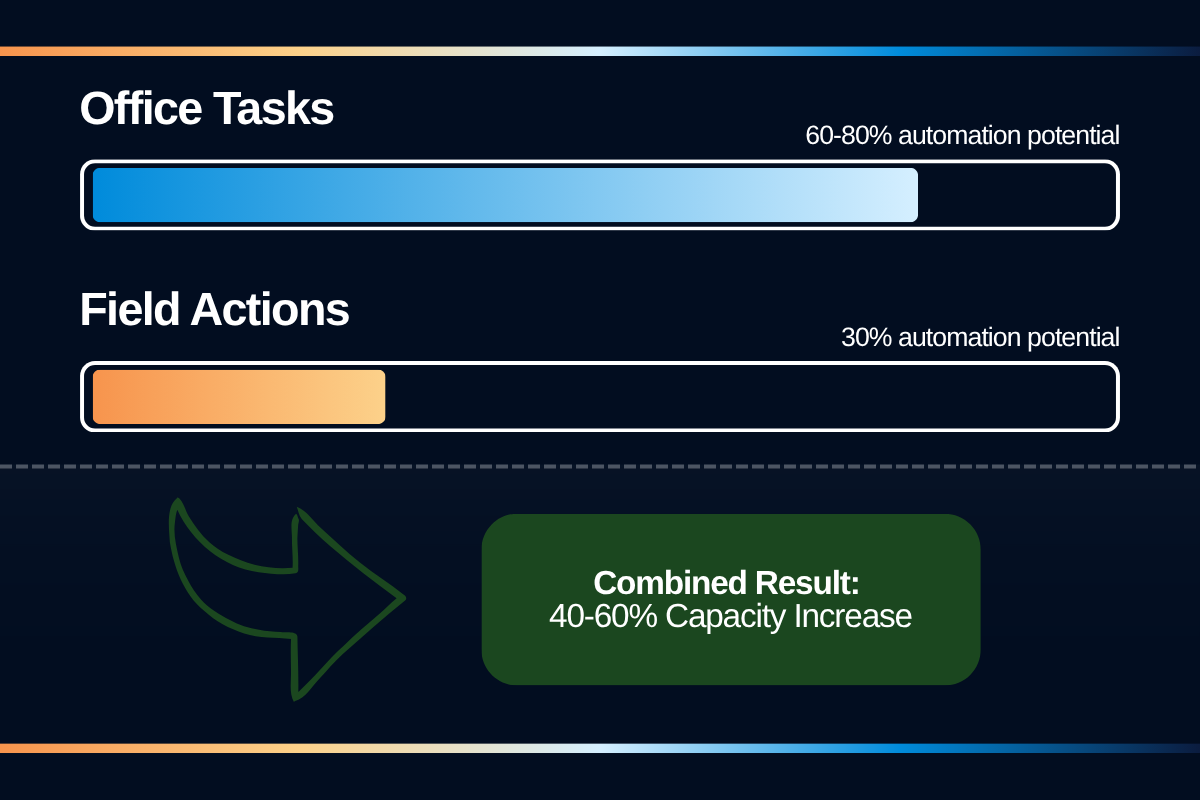

Office-based tasks (documentation, reporting, coordination, analysis, planning): 60-80% automation potential with AI

Field actions (physical troubleshooting, measurements, repairs): 30% automation potential

When you do the math properly across the service pipeline, you’re looking at roughly a 50% service capacity increase (1.5x) through efficiency gains. Not the 3x vendors promise. But 50% is substantial when achieved sustainably in a sector with thin margins and tight OPEX budgets.
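One hedged way to model that office/field arithmetic is sketched below in Python. It assumes "automation potential" translates into a fraction of task time actually saved, and that freed time is fully reinvested in handling more tickets with the same hours. The function name `capacity_multiplier` and all inputs are hypothetical illustrations, not the author's figures; the result is very sensitive to how much of the headline potential is actually realized.

```python
def capacity_multiplier(field_share: float, field_saving: float, office_saving: float) -> float:
    """Throughput multiplier when part of the time spent on each task type is eliminated.

    field_share   -- fraction of total work time spent on field actions (0..1)
    field_saving  -- fraction of field time actually saved by automation (0..1)
    office_saving -- fraction of office time actually saved by automation (0..1)
    """
    office_share = 1.0 - field_share
    # Time still needed per unit of work, relative to today's baseline of 1.0
    remaining = field_share * (1.0 - field_saving) + office_share * (1.0 - office_saving)
    # Same hours, less time per ticket -> proportionally more tickets handled
    return 1.0 / remaining


# Hypothetical inputs: field work is 55% of time, and only part of each
# automation potential (30% field, 60-80% office) is realized in practice.
for office_saving in (0.4, 0.5, 0.6):
    m = capacity_multiplier(field_share=0.55, field_saving=0.15, office_saving=office_saving)
    print(f"office saving {office_saving:.0%}: capacity x{m:.2f}")
```

With these made-up inputs the multiplier lands between about 1.36x and 1.54x. Plugging in your own office vs field ratio is the point of the exercise.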

Stage 1: Detection and Input Efficiency

Every vendor sells better detection. AI anomaly detection, predictive failures, thermal imaging, string analytics.

Promises:

Detect problems before failures

95% accuracy

Predict failures days ahead

Reduce downtime

Reality on broken processes:

80 “anomalies” per site monthly

65 are false positives from garbage baselines

Teams spend 4 hours daily chasing normal variations

Real problems disappear in noise
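Taking the monthly per-site figures above at face value, a few lines of Python show why a "95% accuracy" claim and a usable alert stream are different things: what the team actually lives with is precision, the share of raised alerts that are real. This is a minimal sketch; `alert_precision` is a hypothetical helper and only the 80/65 numbers come from the list.

```python
def alert_precision(total_alerts: int, false_positives: int) -> float:
    """Share of raised alerts that correspond to real problems (precision)."""
    if total_alerts == 0:
        raise ValueError("no alerts raised")
    true_positives = total_alerts - false_positives
    return true_positives / total_alerts


# Per-site monthly figures from the example above
precision = alert_precision(total_alerts=80, false_positives=65)
print(f"Real problems: {80 - 65} of 80 alerts ({precision:.1%})")
print(f"Alerts opened per real problem: {1 / precision:.1f}")
```

Only 15 of 80 alerts are real, so the team opens roughly 5.3 alerts for every real problem it finds.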

A 200 MW operator spent €180K on AI monitoring while their maintenance workflows fell apart. Three months later: ticket backlog up from 47 to 312, resolution time up from 3.2 to 8.7 days, two senior technicians gone, and the system shut down.

The AI worked fine. It detected everything. The operation couldn’t handle what it detected.
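The mechanism is plain queue arithmetic: when detection raises tickets faster than the field team can close them, the backlog grows every single week, no matter how good the detection is. A minimal sketch, assuming fixed weekly inflow and closing capacity; `simulate_backlog` and the 55/40 figures are hypothetical and not taken from the operator above.

```python
def simulate_backlog(start_backlog: int, weekly_new: int, weekly_capacity: int, weeks: int) -> list[int]:
    """Open-ticket backlog at the end of each week, given fixed inflow and fixed closing capacity."""
    backlog = start_backlog
    history = []
    for _ in range(weeks):
        backlog += weekly_new                     # new tickets raised by detection
        backlog -= min(backlog, weekly_capacity)  # the team closes what it can
        history.append(backlog)
    return history


# Hypothetical figures: detection raises more tickets than the field team can close
print(simulate_backlog(start_backlog=47, weekly_new=55, weekly_capacity=40, weeks=13))
```

Any positive gap between inflow and capacity turns into linear backlog growth; only more execution capacity or fewer, better alerts changes the slope.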

On a solid foundation, detection becomes genuinely useful. False positives drop dramatically. The team focuses on real problems. AI handles pattern recognition; humans handle exceptions.

It’s the same way I use AI for data analysis: process high volumes to identify what needs human attention, not to replace judgment.

I should emphasize that this part of operations has matured a lot in recent years, largely because it’s software-driven. There are good options on the market, with new contenders coming in.

My personal take: detection is underused because it’s poorly integrated and because operators aren’t yet trained to handle that complexity.

Stage 2: Execution and Service Throughput

This is where the limitations become clear and where AI hype ignores physics.

Even with perfect detection:

Technician drives 45 km or more to site

Physical troubleshooting, crawling under the modules

Hands-on repair in the cold or heat

Parts take 2-3 days if you’re lucky

Field actions represent roughly 50-60% of O&M work and, according to the Becquerel Institute, have only 30% automation potential by 2030. You can optimize routing, pre-populate diagnostics, and reduce admin overhead. But someone still needs to drive there and fix the physical problem.

Where AI actually helps: Office-based execution tasks (60-80% automation potential):

Auto-triage by severity

Pre-populate work orders with diagnostic context

Route optimization

Automate status updates and documentation

Schedule optimization

Computer vision to turn photos and videos into text

Speech-to-text to fill in tickets, because typing with dirty hands is miserable

This is workflow automation done right. AI handles repetitive information assembly and media conversion; humans focus on execution.
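To make "auto-triage by severity" concrete, here is a minimal Python sketch of one possible ordering rule: safety issues first, then the largest estimated production loss. The `Ticket` fields and the rule itself are hypothetical assumptions; a real setup would use whatever fields your monitoring platform and work order system actually expose, and probably a richer score (SLA deadlines, contract penalties, travel).

```python
from dataclasses import dataclass


@dataclass
class Ticket:
    site: str
    issue: str
    lost_kw: float      # estimated power currently lost (hypothetical field)
    safety_risk: bool   # safety issues are always dispatched first


def triage(tickets: list[Ticket]) -> list[Ticket]:
    """Order tickets for dispatch: safety first, then largest production loss."""
    # False sorts before True, so safety tickets (not True == False) come first;
    # negating lost_kw puts the biggest losses first within each group.
    return sorted(tickets, key=lambda t: (not t.safety_risk, -t.lost_kw))


queue = triage([
    Ticket("Site A", "string underperforming", lost_kw=4.0, safety_risk=False),
    Ticket("Site B", "inverter trip", lost_kw=250.0, safety_risk=False),
    Ticket("Site C", "damaged cable insulation", lost_kw=0.0, safety_risk=True),
])
for t in queue:
    print(t.site, t.issue)
```

A plain sort key keeps the rule readable and auditable, which matters when technicians ask why their ticket was deprioritized.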

The compound effect: When detection is accurate AND office-based execution tasks are automated:

Technicians arrive with right parts

Diagnostic context cuts troubleshooting time

First-time fix rate improves

Admin overhead drops

One distributed operator implemented AI routing and work order automation on solid workflows. Same team, same sites. Service capacity increased roughly 40% because technicians stopped wasting time on admin overhead and coordination.
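The operator's actual routing method isn't described, so the sketch below uses a plainly swapped-in technique: a greedy nearest-neighbour heuristic over straight-line (haversine) distances. It is not optimal and ignores roads, time windows, and parts availability, but it shows the basic idea of ordering site visits to cut drive time. Function names and coordinates are hypothetical.

```python
import math


def haversine_km(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Great-circle distance between two (lat, lon) points in km."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))


def nearest_neighbour_route(depot: tuple[float, float],
                            sites: dict[str, tuple[float, float]]) -> list[str]:
    """Greedy visiting order: always drive to the closest unvisited site next."""
    route, current, remaining = [], depot, dict(sites)
    while remaining:
        name = min(remaining, key=lambda n: haversine_km(current, remaining[n]))
        route.append(name)
        current = remaining.pop(name)  # move to the chosen site
    return route


# Hypothetical depot and site coordinates
depot = (48.00, 2.00)
sites = {"Site A": (48.40, 2.00), "Site B": (48.05, 2.00), "Site C": (48.20, 2.00)}
route = nearest_neighbour_route(depot, sites)
print(route)
```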

Stage 3: Output and Strategic Service Optimization

Existing tools already deliver real-time reports, data validation, and client updates without AI. Your SCADA, monitoring platform, and reporting systems handle this today.

What AI enhances:

Speed: Reports in seconds vs hours

Volume: Insights across hundreds of sites simultaneously

Adaptation: Same data tailored for different readers—technical for O&M, executive for asset managers, compliance for regulators

This is where I use AI extensively: not to replace analysis, but to accelerate and adapt it.

Example: 15 underperforming strings across 8 sites.

Without AI: 3-4 hours for a manual report, in one format

With AI: Draft in 30 seconds from raw data, multiple formats for stakeholders, human validates and adds context, delivered while problem gets fixed.

I do this regularly. AI drafts from data, I validate and refine. Half a day becomes 30 minutes.
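The AI drafting step itself isn't shown here; this Python sketch only illustrates the "same data, different readers" idea with plain templating, which is roughly what you would ask a model to do and then validate. The `readings` layout, the function names, and the numbers are all hypothetical.

```python
from collections import defaultdict

# Hypothetical raw data: (site, string_id, expected_kwh, actual_kwh)
readings = [
    ("Site 1", "S01", 120.0, 96.0),
    ("Site 1", "S07", 118.0, 101.0),
    ("Site 2", "S03", 122.0, 80.0),
]


def technical_view(rows):
    """Per-string detail for the O&M team."""
    return [f"{site} {sid}: {actual / expected:.0%} of expected ({expected - actual:.0f} kWh short)"
            for site, sid, expected, actual in rows]


def executive_view(rows):
    """Portfolio-level shortfall summary for asset managers."""
    shortfall = defaultdict(float)
    for site, _, expected, actual in rows:
        shortfall[site] += expected - actual
    total = sum(shortfall.values())
    return [f"{len(rows)} underperforming strings across {len(shortfall)} sites, "
            f"{total:.0f} kWh below expectation"]


for line in technical_view(readings) + executive_view(readings):
    print(line)
```

The human step stays the same as described above: check the numbers and add the context the data can't provide.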

If we look at the compound effect across all stages:

Accurate detection (Stage 1) → Efficient execution with automated office tasks (Stage 2) → Fast adapted output (Stage 3)

Result: Asset managers see problems and resolutions before metrics suffer. Field teams solve more with less waste. Planning improves because data is accurate and timely.

Realistic overall improvement: 40-60% service capacity increase depending on your office vs field task ratio.

That’s meaningful. But only if your processes can support it.

The Foundation (Typically Skipped or Underestimated)

Back to what triggered the comment: predictive maintenance. Before deploying predictive maintenance, which is an AI application, get commissioning, corrective maintenance, and preventive maintenance working.

Not exciting. Can’t press release it. Won’t get conference invitations. Borderline grunt work.

But it’s reality. And I say this while using AI tools extensively to multiply my own productivity.

Teams skip this because vendors seduce them. “Our system learns from your data and improves over time.” Sure. It learns from garbage data, gets excellent at predicting garbage outcomes, and you end up with a burnt-out team for nothing.

I’ve experienced this several times using AI for data analysis daily, and I can confirm: garbage in, garbage out. AI amplifies whatever you feed it. Clean data with solid processes? Multiplies capability. Messy data with broken workflows? Multiplies problems.

Example: a 300 MW operator deployed predictive analytics on top of chaotic corrective maintenance. The AI predicted 83% of failures correctly.

Average time from prediction to repair: 11 days. Most failures occurred before day 11.

Perfect predictions. Useless outcomes. €200K wasted.

You can’t compound gains when Stage 2 lacks the capacity to respond to the depth and volume of Stage 1’s predictions.
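The 11-day example boils down to one comparison: a correct prediction only prevents a failure if the repair lands before the failure does. A minimal Python sketch, assuming you know the warning lead time for each correctly predicted failure; `prevented_share` and the lead times are hypothetical, chosen only so that most failures happen before day 11.

```python
def prevented_share(lead_times_days: list[float], repair_lag_days: float) -> float:
    """Share of correctly predicted failures that are repaired before they happen."""
    if not lead_times_days:
        return 0.0
    in_time = sum(1 for lead in lead_times_days if repair_lag_days <= lead)
    return in_time / len(lead_times_days)


# Hypothetical warning lead times (days between prediction and actual failure)
lead_times = [2, 4, 5, 7, 8, 9, 12, 15, 21, 30]
for lag in (11, 5, 2):
    print(f"repair lag {lag:>2} days -> {prevented_share(lead_times, lag):.0%} of predicted failures prevented")
```

Multiplying this share by the prediction rate (83% in the example) gives the fraction of all failures actually avoided. Shortening the repair lag, which is a Stage 2 problem, moves that number far more than better predictions do.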

What needs working first:

Commissioning:

Equipment setup to standards

Devices documented with actual specs

Performance baselines established under measured conditions

Electrical and data mapping that matches reality, not outdated drawings
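A commissioning baseline is what keeps detection from producing "garbage baseline" false positives. One common yardstick is the Performance Ratio, PR = final yield / reference yield, as defined in IEC 61724-1. The Python sketch below is simplified (no temperature correction), and the `deviates_from_baseline` helper, the 0.82 baseline, and the 5-point tolerance are hypothetical values for illustration.

```python
G_STC_KW_PER_M2 = 1.0  # irradiance at Standard Test Conditions (1000 W/m2)


def performance_ratio(e_ac_kwh: float, p_stc_kwp: float, h_poa_kwh_per_m2: float) -> float:
    """PR = final yield / reference yield (simplified, no temperature correction)."""
    final_yield = e_ac_kwh / p_stc_kwp                    # kWh per kWp installed
    reference_yield = h_poa_kwh_per_m2 / G_STC_KW_PER_M2  # equivalent full-sun hours
    return final_yield / reference_yield


def deviates_from_baseline(pr: float, baseline_pr: float, tolerance: float = 0.05) -> bool:
    """Flag only when PR falls more than `tolerance` below the commissioning baseline."""
    return pr < baseline_pr - tolerance


# Hypothetical 500 kWp block on a day with 6.0 kWh/m2 plane-of-array irradiation
baseline = 0.82
for energy in (2400.0, 2100.0):
    pr = performance_ratio(energy, 500.0, 6.0)
    print(f"{energy:.0f} kWh -> PR {pr:.2f}, flag: {deviates_from_baseline(pr, baseline)}")
```

The tolerance band is the design choice that matters: without a measured baseline you can't set it sensibly, and every normal variation becomes an "anomaly".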

The right tech stack:

Hardware and software that are actually interoperable

Systems that communicate seamlessly, not through endless middleware patches

SCADA, monitoring platforms, and data systems that speak the same protocols

Without this, your tech stack becomes an overpriced bottleneck no amount of AI can fix

Corrective maintenance:

Clear escalation paths

Standardized diagnostics

Root cause analysis

Documentation that helps the next person

Trained team

Geographic distribution of capacity

Preventive maintenance:

Schedule based on actual wear patterns

Executable procedures

Working parts inventory

Metrics proving prevention

Get these working across all three stages. Then AI amplifies each AND creates compound effects.

How I work: foundation first, AI with precision second, results a natural third.

Share with your O&M team—especially if they’re being pressured to “deploy AI” without fixing basics first.

Some Real Numbers in Practice

Operator A (150MW, solid processes):

Before: 4 technicians, 180 tickets/month, 92% SLA

Applied AI surgically across office tasks and workflow optimization

After: 4 technicians, 270 tickets/month, 96% SLA

Result: 50% capacity increase, same headcount

Operator B (200MW, broken processes):

Before: 5 technicians, 160 tickets/month, 78% SLA

Deployed vendor AI solution on dysfunctional workflows

After: 5 technicians, 380 tickets/month, 64% SLA

Result: worse SLA, two technicians quit, chaos

Same category of technology. Opposite outcomes.

It wasn’t the AI. It was surgical precision on a solid foundation versus generic deployment on dysfunction.

I see this pattern constantly. Technology is almost never the limiting factor. Process maturity determines success or failure.
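Reading the Operator A and B numbers side by side shows why ticket volume alone is a misleading metric. This short Python sketch uses only the figures listed above; the `summarise` helper is a hypothetical name. Operator A's volume per technician rises while SLA improves (real capacity), whereas Operator B's volume rises even faster while SLA collapses (ticket inflation).

```python
def summarise(name: str, techs: int, tickets_before: int, tickets_after: int,
              sla_before: int, sla_after: int) -> str:
    """Compare ticket throughput and SLA for the same headcount before and after AI."""
    per_tech_before = tickets_before / techs
    per_tech_after = tickets_after / techs
    change = tickets_after / tickets_before - 1
    return (f"{name}: {per_tech_before:.1f} -> {per_tech_after:.1f} tickets/technician/month "
            f"({change:+.0%} volume), SLA {sla_before}% -> {sla_after}%")


print(summarise("Operator A", 4, 180, 270, 92, 96))
print(summarise("Operator B", 5, 160, 380, 78, 64))
```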

Field Work Through 2030 (The Part Usually Avoided in Pitches)

Detection will be fantastic. AI identifies problems with 95% accuracy. Drones stationed on site scan automatically. Satellites and sensors track everything.

Still need humans for:

Troubleshooting the loose connection three panels upstream causing “inverter fault 437”

Measuring to verify that an IR anomaly isn’t just a shadow

Tightening connections, replacing combiners, re-terminating cables

Physical work needing hands, tools, judgment

Detection automated. Execution isn’t.

This is the 30% automation ceiling for field actions. Every honest person knows it. AI pitches conveniently skip it.

What O&M Companies Actually Need

Fair evaluation: Poor documentation, inconsistent processes, reactive maintenance, skills gaps.

Solution: Not sexy. Not demo material. But it works, long term.

Build foundation:

Stage 1: Accurate baselines, configured equipment, clean data

Stage 2: Standardized procedures, trained teams, working parts inventory

Stage 3: Documentation systems, clear communication protocols

Then apply AI surgically:

Focus on office-based tasks (60-80% automation potential):

Automated report generation and adaptation

Intelligent triage and routing

Documentation and status updates

Data analysis and pattern recognition

Accept field task limitations (30% automation potential):

Diagnostic support for faster troubleshooting

Optimized routing to reduce drive time

Better coordination reducing delays

Result: 40-60% service capacity increase, relieved team, same headcount.

Without solid processes, AI can’t create compound gains. It compounds dysfunction.

The Path Forward

Should we avoid AI in O&M? Absolutely not. We embrace it smartly.

The technology works. Many use it daily to multiply productivity in some way. But it’s an amplifier, not a solution.

If your processes are solid, AI applied smartly can increase your service capacity by 40-60%. That’s meaningful. That’s sustainable. That’s worth investing in.

If your processes are broken, AI will compound your problems faster than you can fix them. That €200K system becomes an expensive lesson in why fundamentals matter.

The choice is simple but not easy: Fix the foundation first. Then leverage AI in a smart way to compound your gains.

Or skip the foundation and watch AI amplify your dysfunction at scale. Your call.

For those willing to do the unglamorous work of getting processes right first—the opportunity is substantial. You’ll pull ahead of competitors chasing AI magic while their operations burn. You’ll build teams that can actually execute instead of drowning in tickets. You’ll create the foundation for continuous improvement rather than expensive failed deployments.

The industry needs more operators who understand this. Who can tell what’s real and tangible from commercial utopia. Who build systems that relieve good people from repetitive work so they can apply expertise where it matters.

That’s the conversation worth having.

Your turn:

What’s your experience with overhyped AI solutions or implementations in solar?

Drop your experience in comments. The industry needs honest conversations from practitioners about what actually works, not just what demos well.

Subscribe for insights on applying AI with surgical precision to boost productivity—from someone using these tools daily, not selling them.